- Robert meets World

CLI -> GUI -> NLI: LLMs & Natural Language Computing

In a recent conversation between Naval Ravikant and Scott Adams, Naval made a remark that struck me and has been on my mind ever since:

LLMs function as a form of natural language computing.

Much like the graphical user interface (GUI) revolutionized access to computing, this shift in interaction paradigm could radically reshape applications whose design was dictated by the limitations of earlier interfaces.

The Evolution of Computing Interfaces

Bridging Intent and Execution

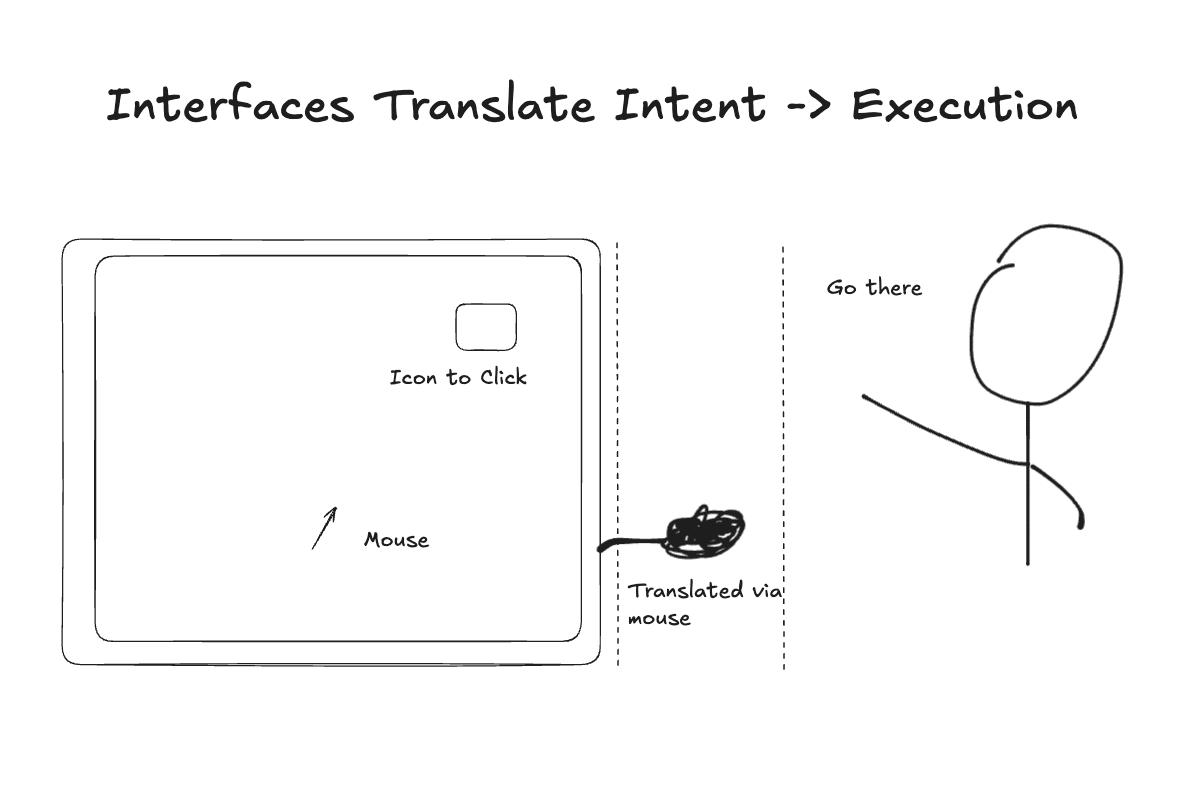

What is an interface?

In human-computer interaction design, a computing interface serves as a conduit between intention and machine execution.

Functionally, each major development in interfaces has aimed to reduce cognitive and mechanical effort, enabling users to achieve their goals more efficiently.

Interfaces, as protocols, translate human intent into actionable, computable commands, shaped by both technological capabilities and human cognitive constraints.

Example

Say I want to click an icon in the right-hand corner of the screen. I move the mouse, which acts as an extension of my hand, to communicate that intent to the machine for execution.

If I lived in the 1960s, there would, first of all, be no GUI; but if there were, I'd probably have to type a series of text commands like:

go_right 100px

go_up 200px

click
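To make the contrast concrete, here is a minimal Python sketch of how such commands might be interpreted. The `go_right`, `go_up`, and `click` names come from the hypothetical example above; `go_left`, `go_down`, the `Cursor` class, and the convention that screen y grows downward (so `go_up` decreases y) are my own assumptions for illustration, not a real historical system.

```python
from dataclasses import dataclass


@dataclass
class Cursor:
    x: int = 0
    y: int = 0
    clicks: int = 0


def run(script: str, cursor: Cursor) -> Cursor:
    """Execute hypothetical pointer commands, one per line.

    Assumes screen coordinates where x grows rightward and y grows
    downward, so go_up decreases y.
    """
    for line in script.strip().splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        cmd = parts[0]
        if cmd == "click":
            cursor.clicks += 1
            continue
        # Movement commands take a distance such as "100px".
        amount = int(parts[1].removesuffix("px"))
        if cmd == "go_right":
            cursor.x += amount
        elif cmd == "go_left":
            cursor.x -= amount
        elif cmd == "go_up":
            cursor.y -= amount
        elif cmd == "go_down":
            cursor.y += amount
        else:
            # Exact syntax is mandatory: any unknown word halts the script.
            raise ValueError(f"unknown command: {cmd}")
    return cursor


c = run("""
go_right 100px
go_up 200px
click
""", Cursor(x=500, y=400))
print(c)  # Cursor(x=600, y=200, clicks=1)
```

Every step of intent has to be spelled out in exact syntax; a single typo such as `go_rigth` stops the whole script, which is precisely the friction the mouse and GUI later removed.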

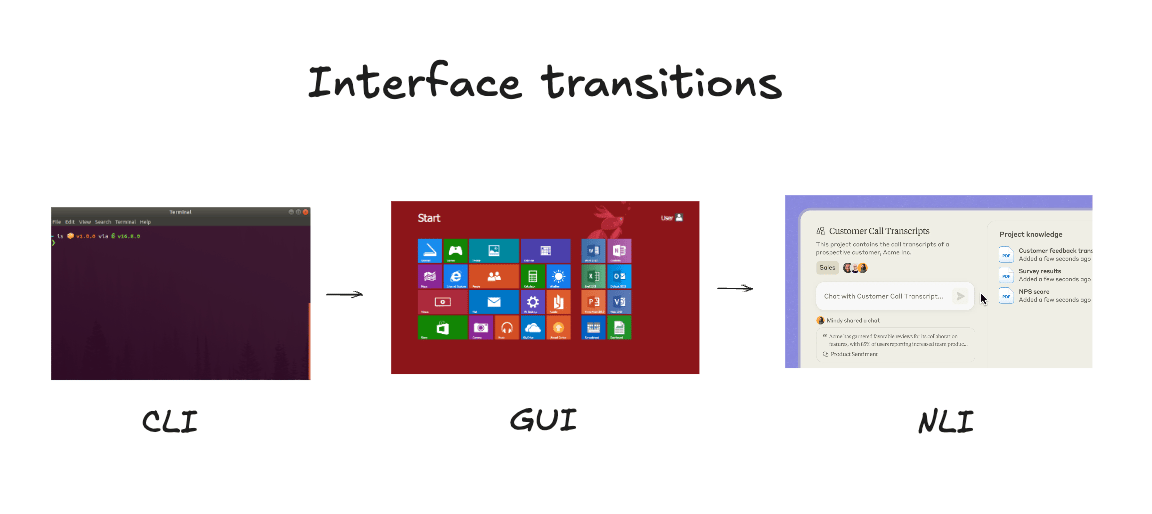

Three (approx.) Waves of Interface Evolution:

We've evolved our computing interfaces aggressively over the past few decades, through different waves and different form factors (e.g., phone vs. computer). But let's look at the idea of natural language computing through three of these waves.

1. Command Line Interface (CLI)

Text-based, requiring specific commands and syntax

Powerful yet limited to technically skilled users

2. Graphical User Interface (GUI)

Visual, intuitive interaction

Expanded accessibility, enabling broad adoption of personal computing

3. Natural Language Interface (NLI)

Interactions in everyday language

Lowers the technical barrier and the need to build ad hoc visual systems for expressing intent

In the GUI era, while visuals helped bridge the gap, the path from intent to execution was still hampered by the diversity of visual presentations.

Think: every banking website uses essentially the same design system (e.g., a logout button, your balance, et cetera), but you still have to learn to navigate each one and its quirks. Where the heck did they put the account statement again? (It keeps moving, too!)

Language, being our most universal interface for communicating with one another, offers a different and more intriguing direction for computing. Rather than logging into a bank website and navigating to your accounts screen, you could simply ask for your balance, hopefully with less friction than phone banking.

Innovation Happens When You Change The Rules

Each new interface paradigm spawns inventions that were unthinkable under prior systems.

For instance:

GUI’s Impact: Enabled creative tools like Photoshop and visually based video games, which would have been impractical with text-based commands.

NLI’s Potential: Unlocks applications such as:

Knowledge Work Automation: “Summarize meeting notes.”

Creative Assistance: “Generate blog ideas on AI.”

Simplified System Configuration: “Set my calendar to alert me every Friday.”

By eliminating the need to translate human intent into structured syntax or controls under a particular GUI system, natural language interfaces reduce cognitive friction and expand possibilities.
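As a rough illustration of the structured syntax an NLI spares the user, the sketch below turns the calendar request from the list above into a cron expression, a schedule format a user might otherwise have to write by hand. It is a hypothetical keyword-and-regex toy, not how an LLM works; the `to_cron` helper and the 09:00 default alert time are assumptions. It uses the standard cron day-of-week numbering, where 0 is Sunday and 5 is Friday.

```python
import re

# Standard cron day-of-week numbers (0 = Sunday).
WEEKDAYS = {
    "sunday": 0, "monday": 1, "tuesday": 2, "wednesday": 3,
    "thursday": 4, "friday": 5, "saturday": 6,
}


def to_cron(request: str, hour: int = 9, minute: int = 0) -> str:
    """Translate 'every <weekday>' phrasing into a cron expression.

    Hypothetical helper: assumes a default alert time of 09:00.
    """
    pattern = r"every\s+(" + "|".join(WEEKDAYS) + r")"
    match = re.search(pattern, request.lower())
    if match is None:
        raise ValueError("no recurring weekday found")
    day = WEEKDAYS[match.group(1)]
    # Cron fields: minute, hour, day-of-month, month, day-of-week.
    return f"{minute} {hour} * * {day}"


print(to_cron("Set my calendar to alert me every Friday."))  # 0 9 * * 5
```

An LLM-backed interface would handle far more varied phrasing; the point is only that the structured form still exists underneath, it just no longer has to be written by the user.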

GUI to NLI: A Paradigm Shift in Assumptions

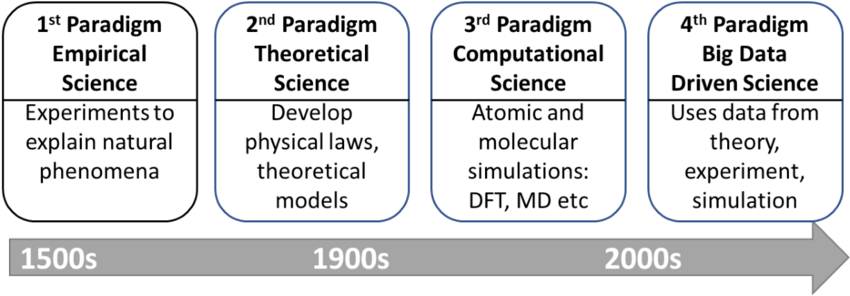

Thomas Kuhn's The Structure of Scientific Revolutions popularized the now-clichéd idea of paradigm shifts, focusing on how the dominant frameworks of a scientific field give way to new ones. Drawing on Kuhn’s framework, the transition from graphical user interfaces (GUIs) to natural language interfaces (NLIs) represents a fundamental change in the assumptions that underlie human-computer interaction.

From Structured Input to Intent-Based Interaction

GUI Assumption: Users navigate predefined, structured pathways (icons, menus) to achieve goals. Actions are bounded by usability conventions and visual affordances.

NLI Assumption: Users articulate goals in natural language, leaving the system to infer, interpret, and execute intent. This presupposes a sophisticated interpretative capacity within the interface, reducing reliance on rigid pathways.

Analogy to Kuhn:

In the same way that computational paradigms replaced manual calculations with algorithmic models, NLIs substitute the rigidity of GUI pathways with fluid, intent-driven workflows.

Just as algorithms redefined the scientific model from linear calculations to programmable systems, NLIs redefine the “user model” of interaction. Instead of users adapting to the system’s structure, the system adapts to the user’s intent.

From Explicit Control to Implicit Collaboration

GUI Assumption: Users maintain explicit, granular control over every action. Mastery of the interface is often a prerequisite for efficient use.

NLI Assumption: The system collaborates with users by inferring and adapting to intent, reducing the need for explicit commands or user mastery of system mechanics.

Analogy to Kuhn:

This transition parallels the scientific movement from deterministic models to probabilistic reasoning.

Just as heuristic methods embraced ambiguity and used contextual inference to manage uncertainty, NLIs treat ambiguity in user intent as a feature.

Probabilistic reasoning and context-awareness allow NLIs to engage in adaptive collaboration, akin to how heuristic models refine scientific predictions.
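A toy sketch of probabilistic intent resolution may help make this concrete. It scores a few hypothetical banking intents by keyword overlap, boosts intents that appear in recent conversational context, and converts the scores to probabilities with a softmax; if no intent is confident enough, it asks for clarification instead of guessing. The intent names, cue words, context boost, and 0.6 threshold are all invented for illustration, and real LLMs infer intent very differently.

```python
import math

# Hypothetical intents, each described by a few cue words (illustrative only).
INTENTS = {
    "show_balance": {"balance", "money", "account"},
    "transfer_funds": {"send", "transfer", "money"},
    "download_statement": {"statement", "download", "pdf"},
}


def interpret(utterance: str, context: frozenset = frozenset(), threshold: float = 0.6):
    """Return (intent name or "clarify", probability per intent)."""
    words = set(utterance.lower().replace("?", "").split())
    # Raw score: cue-word overlap, plus a boost of 1.0 for intents in recent context.
    scores = {
        name: len(words & cues) + (1.0 if name in context else 0.0)
        for name, cues in INTENTS.items()
    }
    # Softmax converts raw scores into a probability distribution over intents.
    total = sum(math.exp(s) for s in scores.values())
    probs = {name: math.exp(s) / total for name, s in scores.items()}
    best = max(probs, key=probs.get)
    # Ambiguity is surfaced as a question rather than a silent guess.
    if probs[best] < threshold:
        return "clarify", probs
    return best, probs


print(interpret("I need money")[0])  # clarify
print(interpret("I need money", context=frozenset({"transfer_funds"}))[0])  # transfer_funds
print(interpret("Show my account balance")[0])  # show_balance
```

The same ambiguous request resolves differently once context is available, a miniature version of the adaptive collaboration described above.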

From Localized Tasks to Contextual Systems

GUI Assumption: Interfaces are task-focused, with discrete, isolated functions optimized for specific purposes (e.g., word processing, graphic design).

NLI Assumption: Interfaces become context-aware and system-centric, integrating data, tools, and workflows into cohesive, purpose-driven processes.

Analogy to Kuhn:

The emergence of computational methods enabled interdisciplinary fields like bioinformatics, dissolving the silos of traditional domains.

Similarly, NLIs unify application domains by interpreting tasks within a broader, system-wide context.

Broader Implications of the Shift

Epistemological Shift

In science, computational paradigms didn’t just answer old questions faster—they redefined what could be asked.

Likewise, NLIs shift how users frame problems. Instead of asking, “What tools do I need?” they ask, “What result am I aiming for?”

This change influences not just interaction but the very conceptualization of workflows and problem-solving.

Efficiency vs. Understanding

Read through Kuhn’s lens, a critical trade-off emerges: as abstraction increases, efficiency improves, but understanding diminishes.

NLIs amplify this tension.

By prioritizing user empowerment and outcome-oriented interaction, they risk obscuring the intricate mechanics of systems they operate. Users gain convenience but potentially lose deep knowledge of the tools they rely on.

Conclusion

The GUI-to-NLI evolution, when viewed through Kuhn’s lens, is not merely a technological enhancement but a profound change in the "rules" of human-computer interaction design.

Author AI transparency notice: This post was developed in collaboration with an AI language model. The core ideas and observations are my own, with AI assistance in refining the final text.